1. Furhat Robot

A social robot for research and innovation by Furhat Robotics

The Furhat robot consists of a base containing a loudspeaker, on which a movable head is mounted. A projector embedded in the articulated head projects a face onto a translucent frosted-glass surface. This allows the robot to display different facial expressions and to move its eyes and mouth dynamically so that it appears to speak.

The ZHAW Centre for AI used this robot for research in human-robot interaction. Today, it is primarily used in education, where it helps explain the principles of artificial intelligence to a diverse audience, including how technologies such as speech-to-text, text-to-speech, and natural language understanding work.

2. Electroencephalography

Electroencephalography (EEG) is a non-invasive neuroimaging technique that measures the electrical activity of the brain through electrodes placed on the scalp.

EEG records the summed electrical activity of large populations of neurons and captures changes in brain activity with millisecond resolution, which makes it suitable for real-time applications. In artificial intelligence (AI), EEG is promising for applications ranging from brain-computer interfaces to cognitive computing. Researchers use EEG data to decode mental states, assess cognitive workload, and even control devices through brain signals. Combining EEG with AI algorithms enables new approaches to neurofeedback, mental health monitoring, and human-machine interaction. By bridging neuroscience and AI, EEG helps advance technologies that can understand and respond to human cognitive processes, with possible uses in healthcare, gaming, and beyond.
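To make "decoding mental states" more concrete: a common first step is to summarize an EEG channel by its power in the classical frequency bands, and these band powers can then be fed to a classifier. The sketch below is illustrative only. It assumes NumPy, a single synthetic channel sampled at 256 Hz, and a simple periodogram estimate; the function names and band limits are our own choices, not taken from a specific ZHAW project.

```python
import numpy as np

def band_power(signal: np.ndarray, fs: float, low: float, high: float) -> float:
    """Mean spectral power of `signal` in the band [low, high) Hz (periodogram estimate)."""
    # Remove the DC offset so it does not leak into the lowest frequency bins
    signal = signal - signal.mean()
    # One-sided power spectrum via the real-valued FFT
    spectrum = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = (freqs >= low) & (freqs < high)
    return float(spectrum[mask].mean())

# Classical EEG bands in Hz (exact boundaries vary slightly between sources)
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}

def band_features(signal: np.ndarray, fs: float) -> dict[str, float]:
    """One feature per band: the kind of input a mental-state classifier might use."""
    return {name: band_power(signal, fs, lo, hi) for name, (lo, hi) in BANDS.items()}

if __name__ == "__main__":
    fs = 256.0                        # assumed sampling rate in Hz
    t = np.arange(0, 2.0, 1.0 / fs)   # 2 seconds of one synthetic channel
    rng = np.random.default_rng(0)
    # Synthetic "relaxed" recording: a strong 10 Hz alpha rhythm plus noise
    eeg = 20 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 5, t.size)
    features = band_features(eeg, fs)
    print(max(features, key=features.get))  # alpha
```

Alpha power typically rises in relaxed, eyes-closed states, which is why even a feature this simple can carry information about a person's state; practical systems add artifact removal, several channels, and a trained model.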

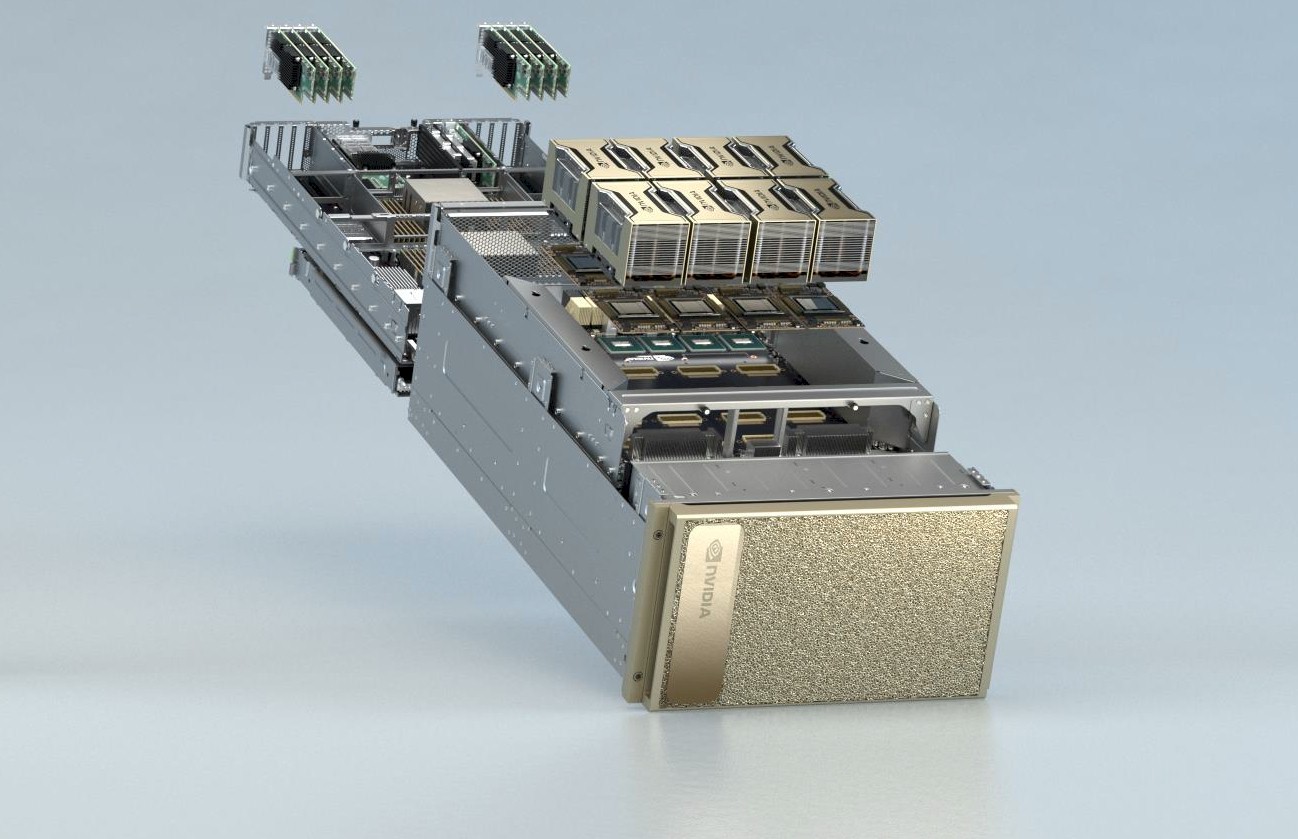

3. Graphics Processing Units

Graphics Processing Units (GPUs) have become indispensable in artificial intelligence (AI), where they accelerate the computationally intensive tasks of model training and inference.

Unlike traditional CPUs, which have a small number of cores optimized for sequential tasks, GPUs contain thousands of simpler cores designed for parallel processing. This suits many AI algorithms, whose core operations, such as matrix multiplications, consist of many independent calculations. Because GPUs can execute these calculations simultaneously, they significantly speed up the training of deep neural networks.
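Matrix multiplication, a core operation in neural networks, shows why parallel hardware helps: each row of the product depends only on one row of the first matrix and on the second matrix, so all rows can be computed independently. The sketch below uses Python's standard `concurrent.futures` thread pool with four workers purely to illustrate that independence. It is not how GPU code is written, and because of CPython's global interpreter lock it will not run faster than the sequential version; frameworks such as PyTorch hand this work to GPU kernels instead.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]

def row_times_matrix(row: list[float], b: Matrix) -> list[float]:
    """One output row of A @ B: it needs only a single row of A and all of B."""
    cols = list(zip(*b))  # transpose B to iterate over its columns
    return [sum(x * y for x, y in zip(row, col)) for col in cols]

def matmul_sequential(a: Matrix, b: Matrix) -> Matrix:
    """Reference version: compute the output rows one after another."""
    return [row_times_matrix(row, b) for row in a]

def matmul_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Dispatch every output row as an independent task.

    No task reads another task's result, so they may run in any order.
    GPUs apply the same idea at a much finer grain, with thousands of
    threads each computing one output element or tile.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so rows stay aligned
        return list(pool.map(lambda row: row_times_matrix(row, b), a))

if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul_parallel(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
```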

The ZHAW Centre for AI operates a high-end GPU cluster that includes Nvidia H100, A100, and V100 GPUs.

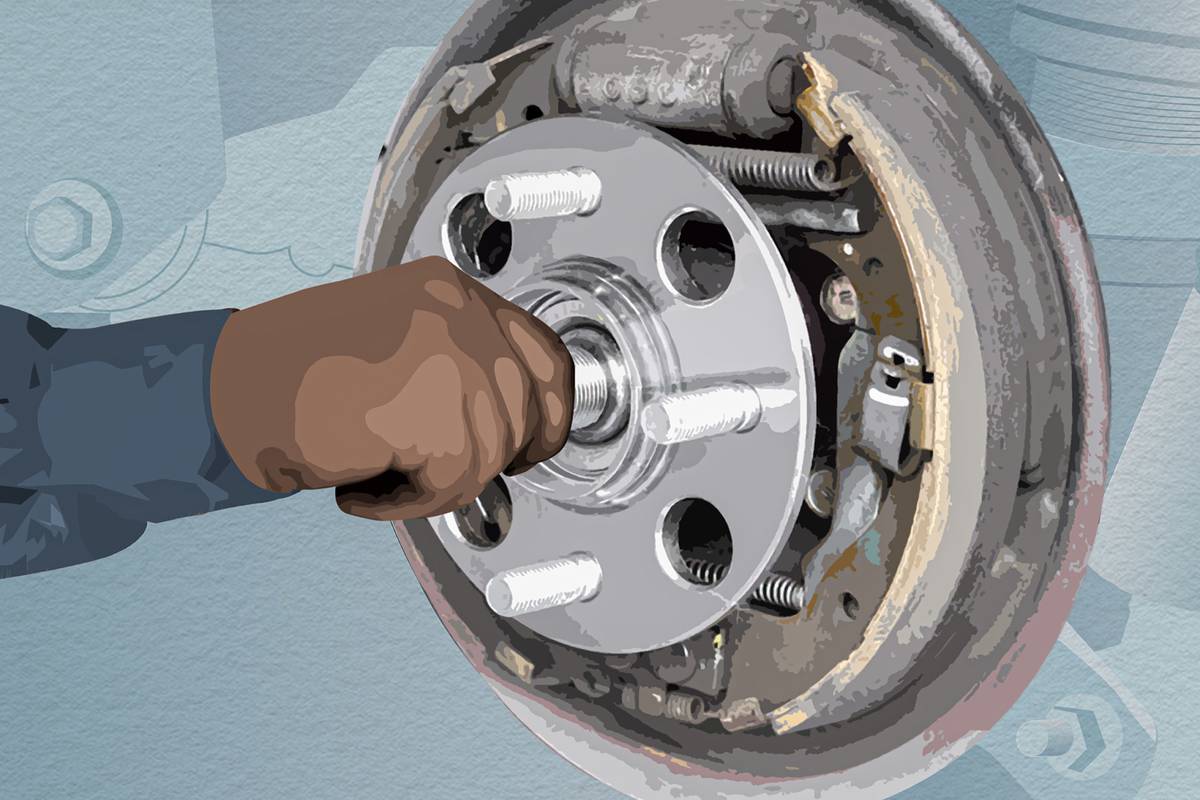

4. Predictive Maintenance

Predictive maintenance uses artificial intelligence to change the way industries manage and maintain their equipment.

Machine learning models analyze historical data, sensor readings, and operational parameters to predict equipment failures before they occur. This proactive approach allows organizations to schedule maintenance exactly when it is needed, which reduces downtime, minimizes costly unplanned outages, and makes better use of resources.

One application of predictive maintenance is analyzing driving and braking behavior to detect wheel-bearing damage at an early stage.
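The core idea, learning what "normal" looks like and raising an alarm when sensor data drifts away from it, can be sketched with a simple statistical baseline. This is a minimal sketch, not the model used in the wheel-bearing project: the vibration readings are hypothetical, and the z-score threshold and the "three consecutive readings" rule are assumptions chosen for illustration. A production system would typically learn from richer features, such as frequency spectra, with a trained machine learning model.

```python
from statistics import mean, stdev
from typing import Optional

def fit_baseline(healthy: list[float]) -> tuple[float, float]:
    """Estimate the normal level (mean, standard deviation) from readings known to be healthy."""
    return mean(healthy), stdev(healthy)

def flag_anomalies(readings: list[float], baseline: tuple[float, float],
                   threshold: float = 3.0) -> list[int]:
    """Indices of readings more than `threshold` standard deviations above the baseline mean."""
    mu, sigma = baseline
    return [i for i, x in enumerate(readings) if (x - mu) / sigma > threshold]

def first_persistent_alarm(flags: list[int], run: int = 3) -> Optional[int]:
    """First flagged index that starts `run` consecutive flagged readings, or None.

    Requiring persistence ignores isolated spikes, e.g. a single hard braking event.
    """
    flagged = set(flags)
    for i in sorted(flagged):
        if all(i + k in flagged for k in range(run)):
            return i
    return None

if __name__ == "__main__":
    # Hypothetical per-trip vibration levels (arbitrary units) from one wheel bearing
    healthy = [1.00, 1.02, 0.98, 1.01, 0.99, 1.03, 0.97, 1.00]
    # Later trips: one isolated spike, then a sustained upward drift
    later = [1.01, 1.60, 0.99, 1.02, 1.25, 1.30, 1.38, 1.45]
    flags = flag_anomalies(later, fit_baseline(healthy))
    print(flags, first_persistent_alarm(flags))  # [1, 4, 5, 6, 7] 4
```

The isolated spike at index 1 is flagged but does not trigger a maintenance alarm; the sustained drift starting at index 4 does, which is the early warning predictive maintenance aims for.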

5. Books

Our researchers have contributed to a range of publications to share their insights.

While there is an abundance of literature on Data Science, little of it focuses on the applied side. Data Science is a discipline that integrates various established research fields, and its essence lies in creating synergies to develop efficient data products for both academic and industrial projects. We aim to provide real-world insights into Data Science by showcasing its use in data-intensive projects, sharing experiences, and drawing lessons from them. The book "Applied Data Science" is intended to complement theoretical textbooks. It offers a "big picture" view and lets readers explore practical Data Science applications, including contributions in which academic and industry experts jointly describe technology transfer in real-world scenarios.

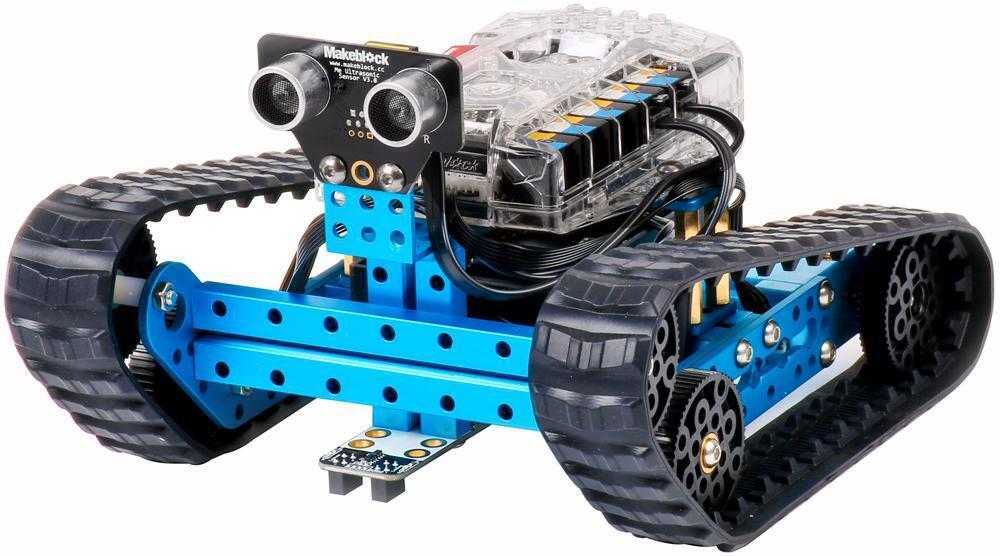

6. Autonomous Robots

We use AI to train autonomous robotic systems.

Artificial intelligence plays a crucial role in enabling autonomous systems to navigate using cameras. Computer vision algorithms allow these systems to interpret the images their cameras capture and to make informed decisions in real time. The AI analyzes the camera feed to understand the environment, recognize objects, detect obstacles, and estimate distances. This information is then turned into navigation commands that let the system adjust its path and avoid collisions.
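The final step, turning what the camera sees into a navigation command, can be illustrated with a very simple rule. This sketch assumes a vision model has already produced a binary obstacle mask for the current frame (1 = obstacle pixel, 0 = free); the function names, the split into three vertical sectors, and the 0.7 free-space threshold are illustrative assumptions, not the controller used on our robots. Real systems usually combine learned perception with a dedicated path planner.

```python
from typing import Sequence

# Assumed detector output: one row per image row, 1 = obstacle pixel, 0 = free
Grid = Sequence[Sequence[int]]

def free_fraction(mask: Grid, col_start: int, col_end: int) -> float:
    """Fraction of free pixels in the vertical strip of columns [col_start, col_end)."""
    cells = [row[c] for row in mask for c in range(col_start, col_end)]
    return 1.0 - sum(cells) / len(cells)

def steer(mask: Grid, min_free: float = 0.7) -> str:
    """Map an obstacle mask to 'forward', 'left', 'right' or 'stop'.

    Assumes the mask is at least 3 columns wide so every sector is non-empty.
    """
    width = len(mask[0])
    third = width // 3
    left = free_fraction(mask, 0, third)
    center = free_fraction(mask, third, width - third)
    right = free_fraction(mask, width - third, width)
    if center >= min_free:
        return "forward"          # path ahead is clear enough
    if max(left, right) < min_free:
        return "stop"             # no sector is safe to enter
    return "left" if left >= right else "right"  # turn toward the freer side

if __name__ == "__main__":
    # Obstacle straight ahead, open space on the right
    mask = [
        [1, 0, 1, 1, 0, 0],
        [0, 0, 1, 1, 0, 0],
        [0, 1, 1, 1, 0, 0],
    ]
    print(steer(mask))  # right
```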
